How to Set Up PyCharm to Run PySpark Jobs

This post is a step-by-step guide to setting up PyCharm to run PySpark jobs.

It walks through setting up your local system to test PySpark jobs, followed by a demo of running the same code with the spark-submit command.

Prerequisites

- PyCharm (download from here)

- Python (Read this to Install Python)

- Apache Spark (Read this to Install Spark)

Let’s Begin

Clone my repo from GitHub for a sample WordCount in PySpark.

Import the cloned project to PyCharm

File –> Open –> path_to_project

Open ~/.bash_profile and add the lines below.

    # Spark Home
    #export SPARK_HOME=/Users/pavanpkulkarni/Documents/spark/spark-2.3.0-bin-hadoop2.7
    export SPARK_HOME=/Users/pavanpkulkarni/Documents/spark/spark-2.2.1-bin-hadoop2.7
    export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin
    export PYTHONPATH=$SPARK_HOME/python/:$PYTHONPATH
    export PYTHONPATH=$SPARK_HOME/python/lib/py4j-0.10.4-src.zip:$PYTHONPATH

Source ~/.bash_profile to reflect the changes.

    source ~/.bash_profile

Install pyspark and pypandoc as shown below.

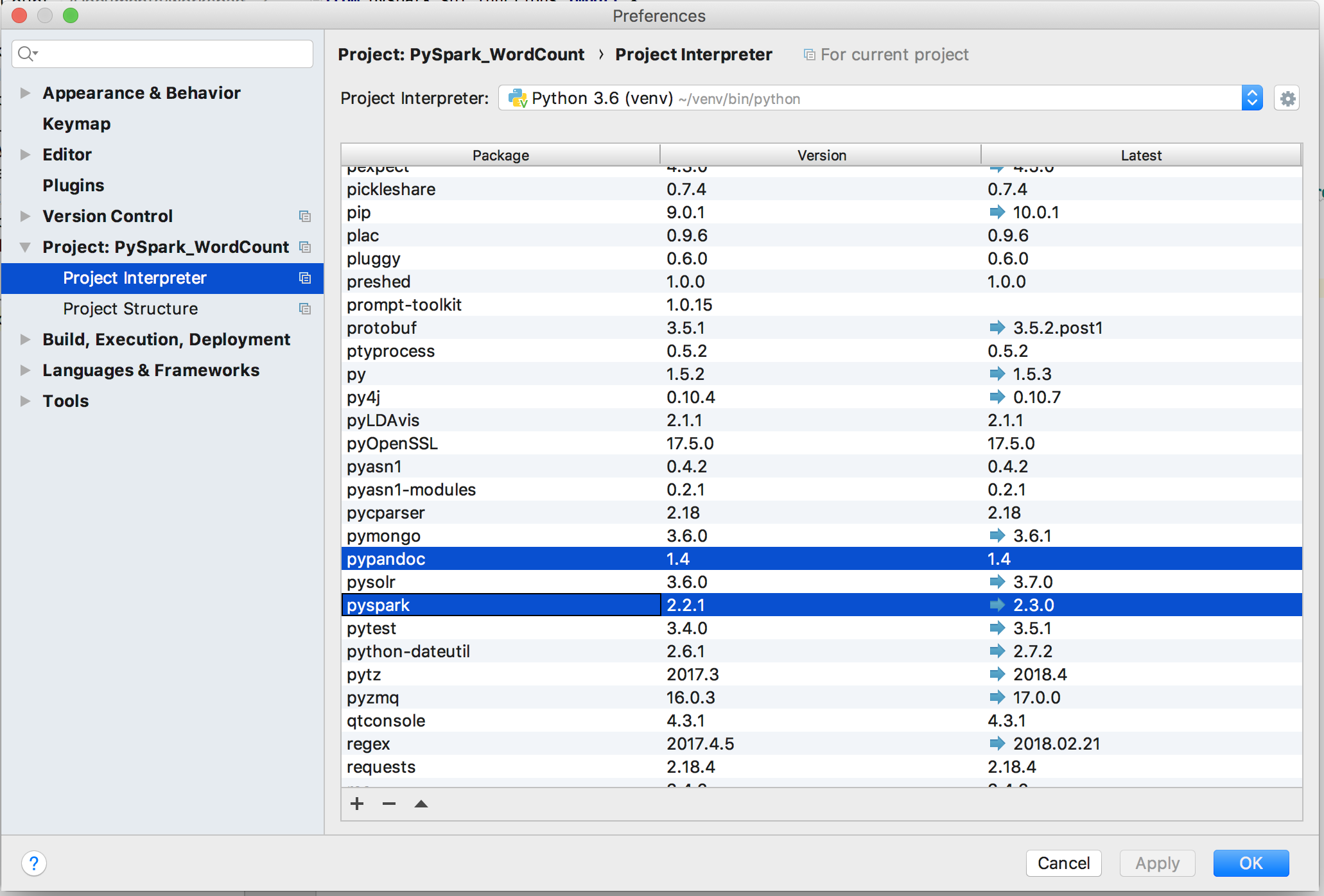

PyCharm –> Preferences –> Project Interpreter
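Before running the job, it can help to confirm that the interpreter PyCharm uses can actually see pyspark and pypandoc. The following is a minimal sketch that relies only on the standard library; `missing_packages` is a hypothetical helper name, not part of PySpark or PyCharm.

```python
import importlib.util


def missing_packages(names):
    """Return the names from `names` that the current interpreter cannot import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# The two packages this post installs through the Project Interpreter page.
required = ["pyspark", "pypandoc"]
missing = missing_packages(required)
if missing:
    print("Not installed for this interpreter:", ", ".join(missing))
else:
    print("All required packages are importable.")
```

Run it with the same interpreter that is selected on the Project Interpreter page, otherwise the result says nothing about the PyCharm run configuration.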

Go to PyCharm –> Preferences –> Project Interpreter. Click on

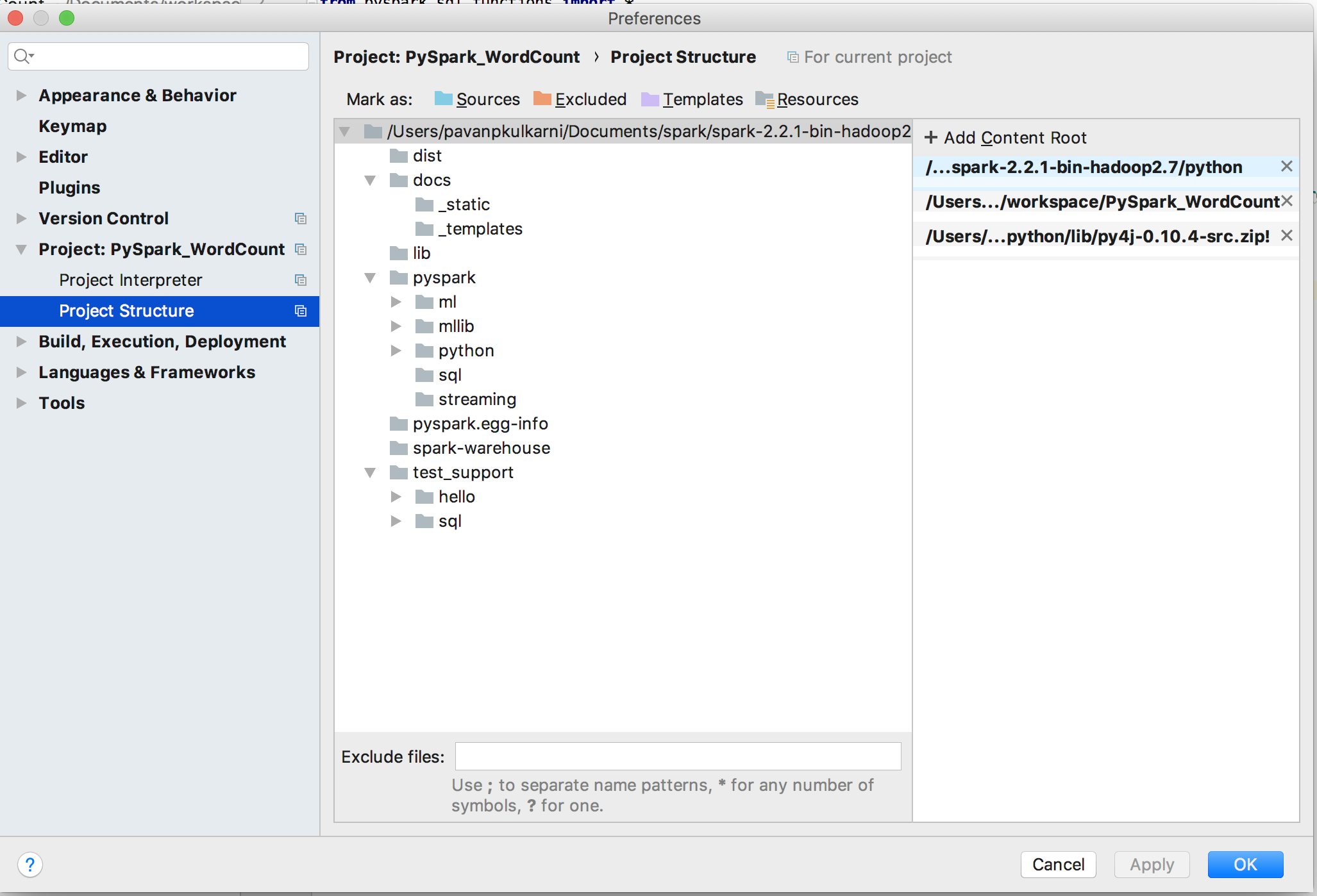

Add Content Root. Here you need to add paths/Users/pavanpkulkarni/Documents/spark/spark-2.2.1-bin-hadoop2.7/python /Users/pavanpkulkarni/Documents/spark/spark-2.2.1-bin-hadoop2.7/python/lib/py4j-0.10.4-src.zip.
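Both content roots follow directly from SPARK_HOME, so they can be derived rather than typed by hand. The sketch below is one way to do that, not something PyCharm or Spark provides: `spark_python_paths` and `add_spark_to_sys_path` are hypothetical helpers, and the code assumes the py4j archive sits under `python/lib` with a name matching `py4j-*-src.zip`, as in the paths used in this post. Globbing avoids hard-coding the py4j version, which changes between Spark releases.

```python
import glob
import os
import sys


def spark_python_paths(spark_home):
    """Return [<spark_home>/python, <py4j source zip>] for a Spark install."""
    python_dir = os.path.join(spark_home, "python")
    lib_dir = os.path.join(python_dir, "lib")
    matches = sorted(glob.glob(os.path.join(lib_dir, "py4j-*-src.zip")))
    if not matches:
        raise FileNotFoundError("no py4j-*-src.zip under " + lib_dir)
    return [python_dir, matches[-1]]


def add_spark_to_sys_path(spark_home):
    """Prepend the Spark Python paths to sys.path if they are not already there."""
    for path in reversed(spark_python_paths(spark_home)):
        if path not in sys.path:
            sys.path.insert(0, path)


# Print the paths to add as content roots, based on the current environment.
spark_home = os.environ.get("SPARK_HOME")
if spark_home:
    try:
        print("\n".join(spark_python_paths(spark_home)))
    except FileNotFoundError as err:
        print(err)
else:
    print("SPARK_HOME is not set")
```

Calling `add_spark_to_sys_path` at the top of a script has a similar effect to the content roots for that one process, which can be handy when running outside PyCharm.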

Restart PyCharm.

Run the project. You should be able to see output.

    /Users/pavanpkulkarni/venv/bin/python /Users/pavanpkulkarni/Documents/workspace/PySpark_WordCount/com/pavanpkulkarni/wordcount/PysparkWordCount.py
    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    Setting default log level to "WARN".
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    18/04/27 15:00:16 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    +---------+-----+
    |     word|count|
    +---------+-----+
    |      ...|    5|
    |     July|    2|
    |       By|    1|
    |   North,|    1|
    |   taking|    1|
    |    harry|   18|
    |     #TBT|    1|
    |  Potter:|    3|
    |character|    1|
    |        7|    2|
    |  Phoenix|    1|
    |   Number|    1|
    |      day|    1|
    |   (Video|    1|
    | seconds)|    1|
    | Hermione|    3|
    |    Which|    1|
    |      did|    1|
    |   Potter|   38|
    |Voldemort|    1|
    +---------+-----+
    only showing top 20 rows

    Word Count : None

    Process finished with exit code 0

Run PySpark with spark-submit.

Open a terminal and type:

    Pavans-MacBook-Pro:PySpark_WordCount pavanpkulkarni$ spark-submit com/pavanpkulkarni/wordcount/PysparkWordCount.py

Output:

    Pavans-MacBook-Pro:PySpark_WordCount pavanpkulkarni$ spark-submit com/pavanpkulkarni/wordcount/PysparkWordCount.py
    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    18/04/27 15:06:32 INFO SparkContext: Running Spark version 2.2.1
    18/04/27 15:06:32 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    18/04/27 15:06:32 INFO SparkContext: Submitted application: WordCount
    18/04/27 15:06:32 INFO SecurityManager: Changing view acls to: pavanpkulkarni
    18/04/27 15:06:32 INFO SecurityManager: Changing modify acls to: pavanpkulkarni
    18/04/27 15:06:32 INFO SecurityManager: Changing view acls groups to:
    18/04/27 15:06:32 INFO SecurityManager: Changing modify acls groups to:
    18/04/27 15:06:32 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(pavanpkulkarni); groups with view permissions: Set(); users with modify permissions: Set(pavanpkulkarni); groups with modify permissions: Set()
    18/04/27 15:06:33 INFO Utils: Successfully started service 'sparkDriver' on port 60536.
    18/04/27 15:06:33 INFO SparkEnv: Registering MapOutputTracker
    18/04/27 15:06:33 INFO SparkEnv: Registering BlockManagerMaster
    18/04/27 15:06:33 INFO BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information
    18/04/27 15:06:33 INFO BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up
    18/04/27 15:06:33 INFO DiskBlockManager: Created local directory at /private/var/folders/nb/ygmwx13x6y1_9pyzg1_82w440000gn/T/blockmgr-67bf5d7f-1b6a-4ce0-9795-b11d17618bd3
    18/04/27 15:06:33 INFO MemoryStore: MemoryStore started with capacity 366.3 MB
    18/04/27 15:06:33 INFO SparkEnv: Registering OutputCommitCoordinator
    18/04/27 15:06:33 INFO Utils: Successfully started service 'SparkUI' on port 4040.
    18/04/27 15:06:33 INFO SparkUI: Bound SparkUI to 0.0.0.0, and started at http://10.0.0.67:4040
    18/04/27 15:06:33 INFO SparkContext: Added file file:/Users/pavanpkulkarni/Documents/workspace/PySpark_WordCount/com/pavanpkulkarni/wordcount/PysparkWordCount.py at file:/Users/pavanpkulkarni/Documents/workspace/PySpark_WordCount/com/pavanpkulkarni/wordcount/PysparkWordCount.py with timestamp 1524855993821
    18/04/27 15:06:33 INFO Utils: Copying /Users/pavanpkulkarni/Documents/workspace/PySpark_WordCount/com/pavanpkulkarni/wordcount/PysparkWordCount.py to /private/var/folders/nb/ygmwx13x6y1_9pyzg1_82w440000gn/T/spark-711b082d-dde7-474b-b370-f046fc4bdf04/userFiles-cf6b481c-9b81-41b4-b929-a20d39e9f4cd/PysparkWordCount.py
    18/04/27 15:06:33 INFO Executor: Starting executor ID driver on host localhost
    18/04/27 15:06:33 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 60537.
    18/04/27 15:06:33 INFO NettyBlockTransferService: Server created on 10.0.0.67:60537
    18/04/27 15:06:33 INFO BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy
    18/04/27 15:06:33 INFO BlockManagerMaster: Registering BlockManager BlockManagerId(driver, 10.0.0.67, 60537, None)
    18/04/27 15:06:33 INFO BlockManagerMasterEndpoint: Registering block manager 10.0.0.67:60537 with 366.3 MB RAM, BlockManagerId(driver, 10.0.0.67, 60537, None)
    18/04/27 15:06:33 INFO BlockManagerMaster: Registered BlockManager BlockManagerId(driver, 10.0.0.67, 60537, None)
    18/04/27 15:06:33 INFO BlockManager: Initialized BlockManager: BlockManagerId(driver, 10.0.0.67, 60537, None)
    18/04/27 15:06:34 INFO SharedState: Setting hive.metastore.warehouse.dir ('null') to the value of spark.sql.warehouse.dir ('file:/Users/pavanpkulkarni/Documents/workspace/PySpark_WordCount/spark-warehouse/').
    18/04/27 15:06:34 INFO SharedState: Warehouse path is 'file:/Users/pavanpkulkarni/Documents/workspace/PySpark_WordCount/spark-warehouse/'.
    18/04/27 15:06:34 INFO StateStoreCoordinatorRef: Registered StateStoreCoordinator endpoint
    18/04/27 15:06:36 INFO FileSourceStrategy: Pruning directories with:
    18/04/27 15:06:36 INFO FileSourceStrategy: Post-Scan Filters:
    18/04/27 15:06:36 INFO FileSourceStrategy: Output Data Schema: struct<value: string>
    18/04/27 15:06:36 INFO FileSourceScanExec: Pushed Filters:
    18/04/27 15:06:36 INFO ContextCleaner: Cleaned accumulator 1
    . . . .
    18/04/27 15:06:38 INFO TaskSetManager: Finished task 3.0 in stage 5.0 (TID 6) in 62 ms on localhost (executor driver) (8/8)
    18/04/27 15:06:38 INFO TaskSchedulerImpl: Removed TaskSet 5.0, whose tasks have all completed, from pool
    18/04/27 15:06:38 INFO DAGScheduler: ResultStage 5 (showString at NativeMethodAccessorImpl.java:0) finished in 0.062 s
    18/04/27 15:06:38 INFO DAGScheduler: Job 2 finished: showString at NativeMethodAccessorImpl.java:0, took 0.070147 s
    +---------+-----+
    |     word|count|
    +---------+-----+
    |      ...|    5|
    |     July|    2|
    |       By|    1|
    |   North,|    1|
    |   taking|    1|
    |    harry|   18|
    |     #TBT|    1|
    |  Potter:|    3|
    |character|    1|
    |        7|    2|
    |  Phoenix|    1|
    |   Number|    1|
    |      day|    1|
    |   (Video|    1|
    | seconds)|    1|
    | Hermione|    3|
    |    Which|    1|
    |      did|    1|
    |   Potter|   38|
    |Voldemort|    1|
    +---------+-----+
    only showing top 20 rows

    Word Count : None
    18/04/27 15:06:38 INFO SparkUI: Stopped Spark web UI at http://10.0.0.67:4040
    18/04/27 15:06:38 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
    18/04/27 15:06:38 INFO MemoryStore: MemoryStore cleared
    18/04/27 15:06:38 INFO BlockManager: BlockManager stopped
    18/04/27 15:06:38 INFO BlockManagerMaster: BlockManagerMaster stopped
    18/04/27 15:06:38 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
    18/04/27 15:06:38 INFO SparkContext: Successfully stopped SparkContext
    18/04/27 15:06:38 INFO ShutdownHookManager: Shutdown hook called
    18/04/27 15:06:38 INFO ShutdownHookManager: Deleting directory /private/var/folders/nb/ygmwx13x6y1_9pyzg1_82w440000gn/T/spark-711b082d-dde7-474b-b370-f046fc4bdf04
    18/04/27 15:06:38 INFO ShutdownHookManager: Deleting directory /private/var/folders/nb/ygmwx13x6y1_9pyzg1_82w440000gn/T/spark-711b082d-dde7-474b-b370-f046fc4bdf04/pyspark-f0149541-b9b5-4fc9-b5d6-08ae6ce63968
    Pavans-MacBook-Pro:PySpark_WordCount pavanpkulkarni$
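The same submission can also be scripted, for example from a test harness. This is a minimal sketch, assuming `spark-submit` is on PATH as configured in ~/.bash_profile. `build_spark_submit_command` and `submit` are hypothetical helper names, and the optional `master` argument maps to spark-submit's `--master` option, which this post does not set.

```python
import shutil
import subprocess


def build_spark_submit_command(script, master=None, app_args=()):
    """Build the argument list for spark-submit without running it."""
    command = ["spark-submit"]
    if master is not None:
        command += ["--master", master]
    command.append(script)
    command += list(app_args)
    return command


def submit(script, master=None, app_args=()):
    """Run spark-submit for `script` and return its exit code."""
    if shutil.which("spark-submit") is None:
        raise RuntimeError("spark-submit not found on PATH")
    command = build_spark_submit_command(script, master, app_args)
    return subprocess.run(command).returncode


# Show the command line for the WordCount job from this post, without running it.
print(" ".join(build_spark_submit_command(
    "com/pavanpkulkarni/wordcount/PysparkWordCount.py")))
```

Keeping the command construction separate from the call to `subprocess.run` makes it possible to check the arguments without a Spark installation.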
